Birmingham’s Extreme Robotics Lab is already market-leading in many of the components that are needed for the increasing efforts to roboticise nuclear operations.

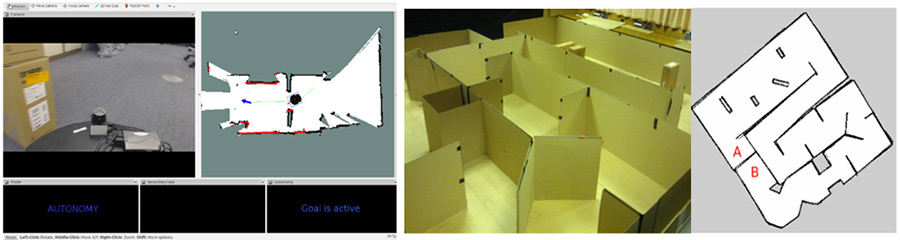

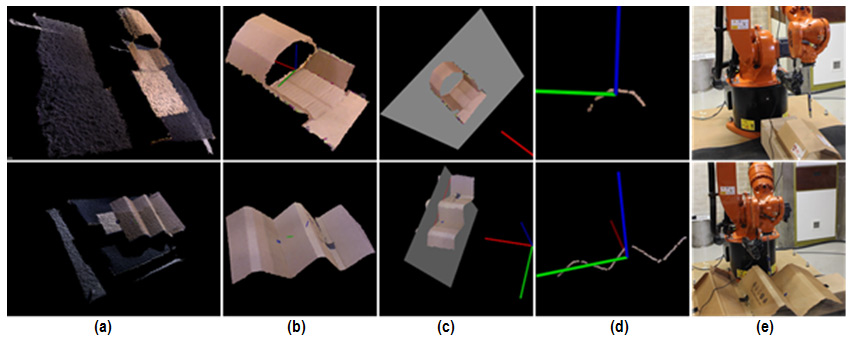

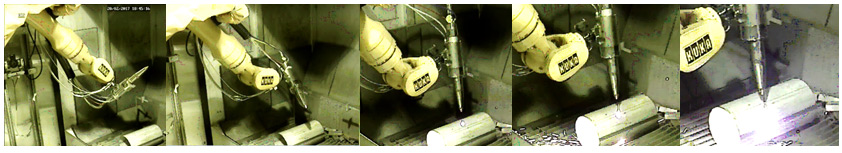

We have already demonstrated autonomous grasping of waste simulants (Fig. 1) and autonomous laser cutting of radioactive materials (Figs. 3 and 4) inside UK nuclear sites. We have also developed a state-of-the-art system for navigation of remote robot vehicles (Fig. 2) and state-of-the-art real-time 3D characterisation with automatic recognition and labelling of materials and objects in scenes (Fig. 5).

While the highly conservative nuclear industry is justifiably wary of autonomous robot control methods, the overwhelming scale of the decommissioning problem (4.9 million tonnes of nuclear waste in the UK alone) demands increased automation. Furthermore, many complex tasks (remote cutting, grasping, manipulation) can be extremely difficult, slow or even impossible using conventional direct joystick control via CCTV camera views. We address these issues with “semi-autonomy” (the human makes high-level decisions, which the AI then plans and executes automatically), “variable autonomy” (the human can switch between autonomous and direct joystick control modes at any time), and “shared control” (the human teleoperates some aspects of motion, e.g. moving the arm towards or away from an object, while the AI automatically controls others, e.g. orienting the gripper relative to the object).
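To make the shared-control idea concrete, here is a minimal sketch, assuming a one-dimensional human input (push forward to approach, pull back to retreat) and an AI that only sets the gripper's pointing direction. The function name `shared_control_step`, the `max_speed` limit and the yaw/pitch (ZYX Tait-Bryan) orientation convention are illustrative assumptions, not the lab's actual controller.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def __sub__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)

    def norm(self) -> float:
        return math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)


def shared_control_step(gripper_pos: Vec3, object_pos: Vec3,
                        human_axis_input: float, max_speed: float = 0.1):
    """Hypothetical shared-control step.

    The human controls ONE degree of freedom: speed along the gripper-to-object
    axis (+1 = towards the object, -1 = away). The AI controls orientation,
    returning yaw/pitch that point the gripper's x-axis at the object.
    """
    offset = object_pos - gripper_pos
    dist = offset.norm()
    if dist < 1e-9:  # already at the object: no well-defined approach axis
        return Vec3(0.0, 0.0, 0.0), 0.0, 0.0
    direction = offset.scale(1.0 / dist)
    u = max(-1.0, min(1.0, human_axis_input))  # clamp operator input
    velocity = direction.scale(u * max_speed)  # human-driven translation
    # AI-driven orientation (ZYX convention: x-axis -> direction)
    yaw = math.atan2(direction.y, direction.x)
    pitch = math.atan2(-direction.z, math.hypot(direction.x, direction.y))
    return velocity, yaw, pitch
```

The split of authority is the point of the design: the operator never has to solve the fiddly orientation problem through a CCTV view, yet keeps direct control over when and how fast the gripper closes in.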

Fig. 1 (above). Extreme Robotics Lab augmented reality interface for semi-autonomous grasping (human-supervised autonomy), demonstrated on the RoMaNS industrial robot testbed at the NNL Workington nuclear industry site.


- Raw CCTV view of the scene, displayed to the human operator.

- Augmented view with projected point-cloud captured by the robot’s wrist-mounted RGB-D camera.

- The AI segments salient objects/parts from the background. Non-background objects are denoted by grey points, while the autonomous agent uses red points to suggest the most easily graspable objects/parts in the scene (computed by a state-of-the-art machine-learning based autonomous grasp planner).

- The agent suggests the best grasp (maximum likelihood grasp) to the human operator, by showing a virtual projection of the gripper (yellow) superimposed on the CCTV video feed.

- After the human operator approves the simulated grasp, the real grasp is executed by the robot.
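The human-supervised grasping loop described in the Fig. 1 steps can be sketched as follows, assuming the grasp planner has already produced scored candidates. `GraspCandidate`, `supervised_grasp` and the `operator_approves`/`execute` callbacks are hypothetical names standing in for the AR interface and robot driver; the sketch only shows the control flow of "suggest the maximum-likelihood grasp, execute only after approval".

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class GraspCandidate:
    label: str          # hypothetical identifier for a candidate gripper pose
    likelihood: float   # planner's estimated probability that the grasp succeeds


def supervised_grasp(
    candidates: List[GraspCandidate],
    operator_approves: Callable[[GraspCandidate], bool],
    execute: Callable[[GraspCandidate], None],
) -> Optional[GraspCandidate]:
    """Suggest the maximum-likelihood grasp; execute it only if the human approves."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.likelihood)
    # In the real interface this is where the virtual gripper would be
    # overlaid on the CCTV feed for the operator to inspect.
    if operator_approves(best):
        execute(best)
        return best
    return None  # rejected: nothing moves
```

Keeping the robot motion behind an explicit approval callback is what makes this "semi-autonomous": the AI does the planning, but no real motion happens without a human decision.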

Fig. 2 (above). MoD-sponsored variable autonomy robot vehicle navigation system. Left, the control interface showing CCTV video and a 2D map being automatically created in real time by the robot’s on-board sensors, while simultaneously localising the robot in the map. The human can directly teleoperate the robot via joystick, or can mouse-click waypoints on the 2D map, which the robot then navigates to autonomously. The human can switch between joystick and autonomous control modes at any time. Right, a maze-like testing arena and the 2D map that was automatically generated by the robot’s sensors and SLAM (simultaneous localisation and mapping) algorithms.
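A minimal sketch of the variable-autonomy switching in Fig. 2, assuming the robot's 2D pose is already supplied by SLAM and that velocity commands are simple (vx, vy) pairs. The class `VariableAutonomyController`, its `max_speed` and `arrive_tol` parameters, and the straight-line "drive at the next waypoint" rule are illustrative assumptions; a real system would use a proper path planner over the SLAM map.

```python
import math
from enum import Enum
from typing import List, Tuple


class Mode(Enum):
    JOYSTICK = "joystick"
    AUTONOMOUS = "autonomous"


class VariableAutonomyController:
    """Hypothetical controller: joystick pass-through or waypoint following."""

    def __init__(self, max_speed: float = 0.5, arrive_tol: float = 0.05):
        self.mode = Mode.JOYSTICK
        self.waypoints: List[Tuple[float, float]] = []  # clicked on the 2D map
        self.max_speed = max_speed
        self.arrive_tol = arrive_tol

    def set_mode(self, mode: Mode) -> None:
        self.mode = mode  # the operator may switch at any time

    def add_waypoint(self, x: float, y: float) -> None:
        self.waypoints.append((x, y))

    def command(self, pose: Tuple[float, float],
                joystick: Tuple[float, float]) -> Tuple[float, float]:
        if self.mode is Mode.JOYSTICK:
            return joystick  # direct teleoperation
        # Discard waypoints the robot has already reached.
        while self.waypoints and math.dist(pose, self.waypoints[0]) <= self.arrive_tol:
            self.waypoints.pop(0)
        if not self.waypoints:
            return (0.0, 0.0)  # nothing left to do: stop
        gx, gy = self.waypoints[0]
        dx, dy = gx - pose[0], gy - pose[1]
        d = math.hypot(dx, dy)
        speed = min(self.max_speed, d)  # slow down on the final approach
        return (dx / d * speed, dy / d * speed)
```

For example, with one waypoint at (3, 4) and unit time steps, the robot covers 0.5 m per step and stops on the waypoint after ten steps; switching back to `Mode.JOYSTICK` mid-route immediately hands control back to the operator without discarding the remaining waypoints.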


Fig. 3 (above). In-lab testing of autonomous laser cutting on a variety of object shapes, using a dummy laser pointer. This technology has been commercialised via ERL’s spin-out company A.R.M Robotics Ltd.


- The robot moves the camera to multiple viewpoints and reconstructs a 3D model of the scene.

- The AI automatically segments the foreground object from background points.

- The human operator selects a cutting plane using two mouse-clicks.

- The AI automatically selects the points along the surface to be cut and plans a trajectory for the laser, maintaining the desired laser stand-off distance and automatically keeping the laser axis perpendicular to the local surface throughout.

- The AI automatically plans a collision-free trajectory of the robot’s joints to deliver the laser along the cutting trajectory.
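The geometric core of the cutting pipeline above (a plane from two clicks, surface points on that plane, and laser poses held at a stand-off distance along the surface normal) can be sketched as follows. This is a sketch under stated assumptions, not ARM Ltd's method: it assumes the two clicks have been back-projected to 3D points, that the cutting plane contains the clicked segment and the camera's viewing direction, and that per-point surface normals are already available from the reconstructed model. `plan_laser_cut` and its parameters are hypothetical, and the final collision-free joint-space planning step is not covered.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k, a[2] * k)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    if n < 1e-12:
        raise ValueError("degenerate vector")
    return scale(a, 1.0 / n)


def plan_laser_cut(points: Sequence[Vec], normals: Sequence[Vec],
                   click_a: Vec, click_b: Vec, view_dir: Vec,
                   standoff: float, tol: float = 1e-3) -> List[Tuple[Vec, Vec]]:
    """Return ordered (laser_position, beam_direction) poses along the cut."""
    cut_dir = normalize(sub(click_b, click_a))
    # Assumed plane: contains the clicked segment AND the viewing direction.
    plane_n = normalize(cross(cut_dir, view_dir))
    selected = []
    for p, n in zip(points, normals):
        if abs(dot(sub(p, click_a), plane_n)) <= tol:  # point lies on the plane
            un = normalize(n)
            laser_pos = add(p, scale(un, standoff))  # stand-off along the normal
            beam_dir = scale(un, -1.0)               # beam perpendicular to surface
            along = dot(sub(p, click_a), cut_dir)    # position along the cut
            selected.append((along, laser_pos, beam_dir))
    selected.sort(key=lambda s: s[0])  # order poses from click_a towards click_b
    return [(pos, d) for _, pos, d in selected]
```

Aligning the beam with the negative surface normal is what keeps the laser axis perpendicular to a curved surface; on a flat plate every pose shares the same beam direction, while on a pipe the direction rotates with the surface.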

Fig. 4 (above). ARM Ltd vision-guided AI control of robotic laser cutting of a curved steel surface in a real radioactive cave at NNL Preston.


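Fig. 5 (above). Real-time 3D characterisation with automatic recognition and labelling of materials and objects in the scene.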